Summarizing our own paper with RUM. Animation credit: Tsveta Tomova

Abstract

Stacking long short-term memory (LSTM) cells or gated recurrent units (GRUs) as part of a recurrent neural network (RNN) has become a standard approach to solving a number of tasks ranging from language modeling to text summarization. While LSTMs and GRUs were designed to model long-range dependencies more accurately than conventional RNNs, they nevertheless have problems copying or recalling information from the distant past. Here, we derive a phase-coded representation of the memory state, Rotational Unit of Memory (RUM), which unifies the concepts of unitary learning and associative memory. We show experimentally that RNNs based on RUMs can solve basic sequential tasks such as memory copying and memory recall much better than LSTMs/GRUs. We further demonstrate that by replacing LSTM/GRU units with RUM units we can apply neural networks to real-world problems such as language modeling and text summarization, yielding results comparable to the state of the art.

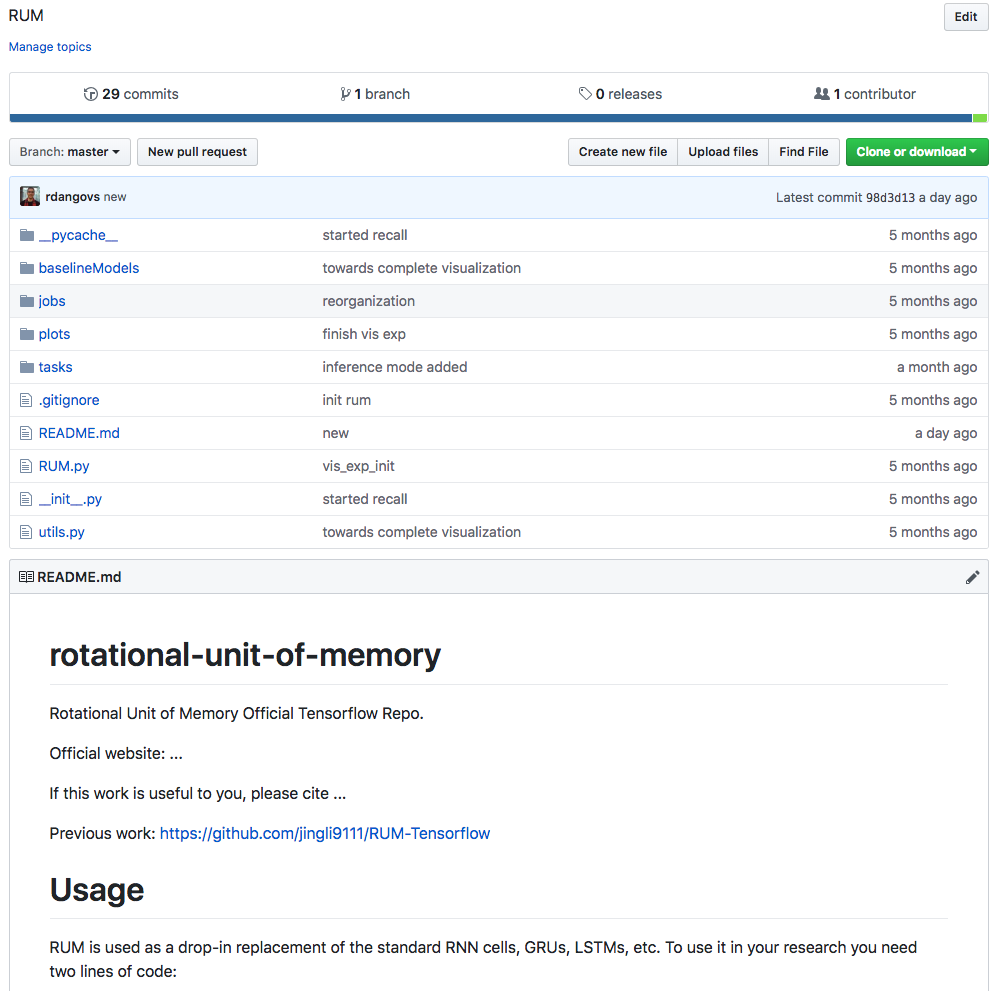

Using RUM

If you have already built a model with RNN units (LSTMs, GRUs, etc.), you can replace those units with RUM. Alternatively, if you want to approach a task that demands copying and recall abilities, you can build a network with RUMs directly. Finally, the concept of rotating the hidden state can be applied outside the RNN domain: our code includes functionality for rotations that you can incorporate into your own models.
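To make the idea of rotating a hidden state concrete, here is a minimal NumPy sketch. It is not the released RUM code: the function name `rotation_matrix` is hypothetical, and the construction (rotate within the plane spanned by two vectors, leave everything orthogonal to that plane untouched) is one standard way to build such a rotation, assumed here for illustration.

```python
import numpy as np

def rotation_matrix(a, b, tol=1e-12):
    """Orthogonal matrix that rotates the direction of `a` onto the
    direction of `b` inside the plane spanned by the two vectors.
    Vectors orthogonal to that plane are left unchanged."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    norm_a, norm_b = np.linalg.norm(a), np.linalg.norm(b)
    if norm_a < tol or norm_b < tol:
        raise ValueError("both vectors must be non-zero")

    n = a.shape[0]
    u = a / norm_a                      # first basis vector of the plane
    w = b - np.dot(u, b) * u            # part of b orthogonal to u
    norm_w = np.linalg.norm(w)
    if norm_w < tol:
        # a and b are (anti)parallel, so the plane is undefined.
        # Assumption for this sketch: fall back to the identity.
        return np.eye(n)
    v = w / norm_w                      # second basis vector of the plane

    cos_t = np.clip(np.dot(u, b) / norm_b, -1.0, 1.0)
    sin_t = np.sqrt(1.0 - cos_t ** 2)   # angle lies in [0, pi], so sin >= 0
    plane_rot = np.array([[cos_t, -sin_t],
                          [sin_t,  cos_t]])
    basis = np.stack([u, v], axis=1)    # shape (n, 2)

    # Remove the identity's action on the plane, then add the 2-D rotation.
    return (np.eye(n) - np.outer(u, u) - np.outer(v, v)
            + basis @ plane_rot @ basis.T)

# Usage: rotate a hidden state h by the rotation that takes a to b.
a = np.array([1.0, 0.0, 0.0])
b = np.array([0.0, 2.0, 0.0])
h = np.array([0.5, 0.0, 1.0])
h_rotated = rotation_matrix(a, b) @ h
```

Because the matrix is orthogonal, applying it preserves the norm of the hidden state, which is the property that makes rotations attractive for carrying information over long time spans.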

Next steps

We are excited about building robust phase-coded models and tackling new applications. If you have questions or ideas, shoot us an email. :)

Demo

Behavior of different models on the copying memory task. Each vector represents the hidden state of a model through time. The models behave differently during the reading, waiting, and writing periods.
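For readers unfamiliar with the task, the sketch below generates data in one common formulation of the copying memory benchmark, with the three phases laid out explicitly. The function name `make_copying_batch`, the alphabet size, sequence length, delay, and the choice of blank and marker tokens are all illustrative assumptions, not settings taken from this page.

```python
import numpy as np

def make_copying_batch(batch_size, n_symbols=8, seq_len=10, delay=20, seed=0):
    """Return (inputs, targets) integer arrays of shape
    (batch_size, 2 * seq_len + delay + 1).

    Token 0 is the blank, tokens 1..n_symbols are data symbols, and
    token n_symbols + 1 is the marker that tells the model to start writing.
    """
    rng = np.random.default_rng(seed)
    blank, marker = 0, n_symbols + 1
    total_len = 2 * seq_len + delay + 1

    symbols = rng.integers(1, n_symbols + 1, size=(batch_size, seq_len))

    inputs = np.full((batch_size, total_len), blank, dtype=np.int64)
    inputs[:, :seq_len] = symbols            # reading period
    # positions seq_len .. seq_len + delay - 1 stay blank: waiting period
    inputs[:, seq_len + delay] = marker      # signal to start writing

    targets = np.full((batch_size, total_len), blank, dtype=np.int64)
    targets[:, -seq_len:] = symbols          # writing period

    return inputs, targets

inputs, targets = make_copying_batch(batch_size=4)
```

The model sees the symbols only during the reading period, so reproducing them after the marker requires the hidden state to retain them through the entire waiting period.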

Citation

@article{dangovski2019,
  title={Rotational unit of memory: a novel representation unit for {RNN}s with scalable applications},
  author={Dangovski, Rumen and Jing, Li and Nakov, Preslav and Tatalovi\'{c}, Mi\'{c}o and Solja\v{c}i\'{c}, Marin},
  journal={Transactions of the Association for Computational Linguistics},
  volume={7},
  pages={121--138},
  year={2019}
}