Demo

Behavior of different models on the copying memory task. The vectors represent the hidden state of each model over time. The models behave differently during the reading, waiting and writing periods.
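To make the reading, waiting and writing periods concrete, below is a minimal sketch of how one copying-memory example is commonly constructed. It assumes the usual setup from the unitary RNN literature: 10 symbols drawn from an alphabet of 8, blank = 0, delimiter = 9, and a delay of T steps. The function name and its defaults are hypothetical and are not taken from the GORU code.

```python
import random

def copying_task_example(T, n_copy=10, n_symbols=8, seed=None):
    """Return (inputs, targets) for one copying-memory sequence of length T + 2 * n_copy.

    Assumed token layout (hypothetical, common formulation):
      0             -> blank
      1..n_symbols  -> data symbols to memorize
      n_symbols + 1 -> delimiter that signals "start writing"
    """
    rng = random.Random(seed)
    blank, delim = 0, n_symbols + 1
    data = [rng.randint(1, n_symbols) for _ in range(n_copy)]

    # Reading period: the n_copy data symbols.
    # Waiting period: T - 1 blanks followed by the delimiter.
    # Writing period: n_copy blanks, during which the model must output the data.
    inputs = data + [blank] * (T - 1) + [delim] + [blank] * n_copy
    # The target is blank everywhere except the final n_copy steps.
    targets = [blank] * (T + n_copy) + data
    return inputs, targets

inputs, targets = copying_task_example(T=50, seed=0)
```

A model solves the task only if its hidden state carries the first 10 symbols unchanged across the whole waiting period, which is why the hidden-state trajectories in the demo differ so visibly between the three phases.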

Abstract

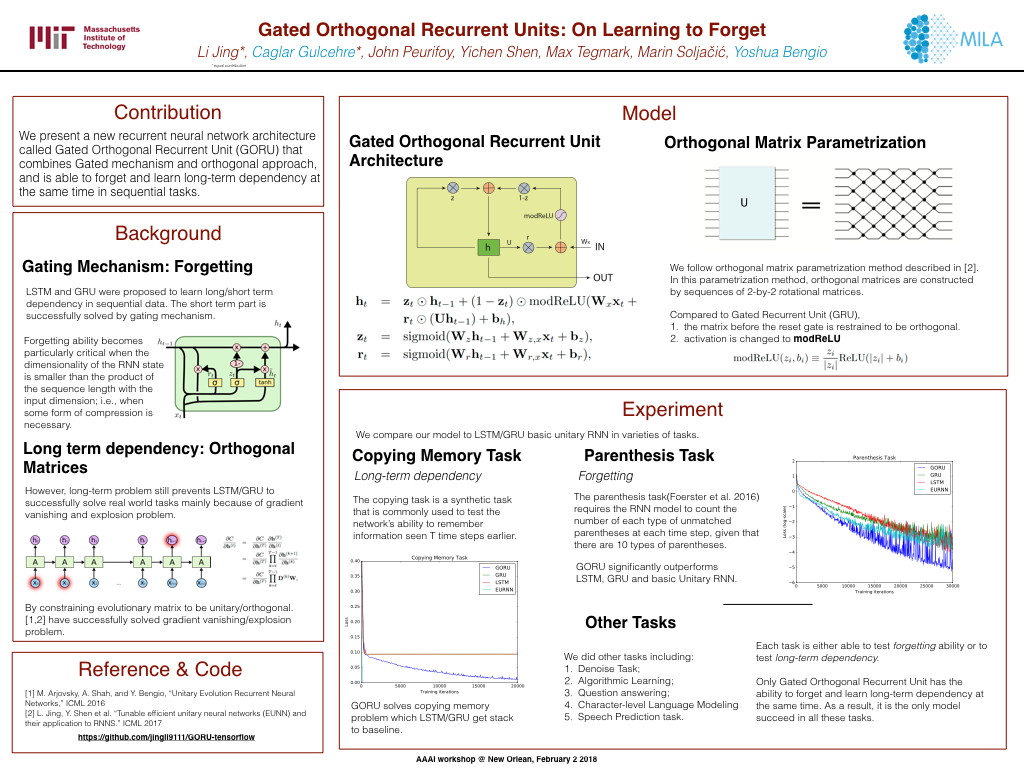

We present a novel recurrent neural network (RNN) based model that combines the remembering ability of unitary RNNs with the ability of gated RNNs to effectively forget redundant or irrelevant information in its memory. We achieve this by extending unitary RNNs with a gating mechanism. Our model, the Gated Orthogonal Recurrent Unit (GORU), outperforms LSTMs, GRUs and unitary RNNs on several long-term dependency benchmark tasks. We empirically show both that orthogonal/unitary RNNs lack the ability to forget and that GORU can simultaneously remember long-term dependencies while forgetting irrelevant information, an ability that plays an important role in recurrent neural networks. We provide competitive results, along with an analysis of our model, on many natural sequential tasks, including bAbI question answering, TIMIT speech spectrum prediction and Penn TreeBank, and on synthetic tasks that involve long-term dependencies, such as the algorithmic, parenthesis, denoising and copying tasks.
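The idea of "extending unitary RNNs with a gating mechanism" can be sketched as a GRU-style cell whose candidate state is driven by an orthogonal recurrent matrix U and a modReLU nonlinearity. This is a minimal, dependency-free sketch under stated assumptions: the gate equations follow our reading of a GRU-style update and should be checked against the paper, and U is built here by Gram-Schmidt on a random matrix and kept fixed, which is a stand-in and not the paper's parameterization or training procedure. All class and function names (`GORUCellSketch`, `random_orthogonal`, `modrelu`) are hypothetical.

```python
import math
import random

def matvec(M, v):
    # Dense matrix-vector product on nested lists.
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def random_matrix(rows, cols, rng, scale=0.1):
    return [[rng.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]

def random_orthogonal(n, rng):
    # Modified Gram-Schmidt on random Gaussian vectors: the rows form an
    # orthonormal basis, so the square matrix is orthogonal (stand-in only).
    basis = []
    while len(basis) < n:
        v = [rng.gauss(0.0, 1.0) for _ in range(n)]
        for b in basis:
            proj = sum(x * y for x, y in zip(v, b))
            v = [x - proj * y for x, y in zip(v, b)]
        norm = math.sqrt(sum(x * x for x in v))
        if norm > 1e-8:
            basis.append([x / norm for x in v])
    return basis

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def modrelu(z, b):
    # Real-valued modReLU: keep the sign of z, shift its magnitude by bias b, clip at 0.
    return [math.copysign(max(abs(zi) + bi, 0.0), zi) for zi, bi in zip(z, b)]

class GORUCellSketch:
    """Hypothetical GRU-style cell with an orthogonal recurrent matrix (assumed equations)."""

    def __init__(self, n_in, n_hidden, seed=0):
        rng = random.Random(seed)
        self.W_r = random_matrix(n_hidden, n_in, rng)
        self.W_z = random_matrix(n_hidden, n_in, rng)
        self.W_x = random_matrix(n_hidden, n_in, rng)
        self.U_r = random_matrix(n_hidden, n_hidden, rng)
        self.U_z = random_matrix(n_hidden, n_hidden, rng)
        self.U = random_orthogonal(n_hidden, rng)  # orthogonal recurrent matrix
        self.b_r = [0.0] * n_hidden
        self.b_z = [0.0] * n_hidden
        self.b_h = [-0.01] * n_hidden  # modReLU bias (hypothetical init)

    def step(self, x, h):
        # Reset gate r_t and update gate z_t.
        r = [sigmoid(a + b + c) for a, b, c in zip(matvec(self.W_r, x), matvec(self.U_r, h), self.b_r)]
        z = [sigmoid(a + b + c) for a, b, c in zip(matvec(self.W_z, x), matvec(self.U_z, h), self.b_z)]
        # Candidate state: the reset gate scales the orthogonal transition U h_{t-1}.
        Uh = matvec(self.U, h)
        pre = [a + ri * u for a, ri, u in zip(matvec(self.W_x, x), r, Uh)]
        h_tilde = modrelu(pre, self.b_h)
        # Update gate interpolates between the old state and the candidate.
        return [zi * hi + (1.0 - zi) * ht for zi, hi, ht in zip(z, h, h_tilde)]

cell = GORUCellSketch(n_in=3, n_hidden=4, seed=1)
h = [0.0] * 4
for x in ([1.0, 0.0, 0.0], [0.0, 1.0, 0.0]):
    h = cell.step(x, h)
```

One intuition for the "lack of forgetting" claim: with the gates removed (h_t = U h_{t-1}), an orthogonal U preserves the norm of the hidden state exactly, so nothing is ever discarded. In this sketch, the reset and update gates give the cell a way to suppress or overwrite parts of that state.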

Citation

Bibliographic information

Li Jing*, Caglar Gulcehre*, John Peurifoy, Yichen Shen, Max Tegmark, Marin Soljacic, Yoshua Bengio "Gated Orthogonal Recurrent Units: On Learning to Forget." Neural Computation 31 (4), 765–783 (2019)

@article{jing2017b,

title={Gated Orthogonal Recurrent Units: On Learning to Forget},

author={Jing, Li and Gulcehre, Caglar and Peurifoy, John and Shen, Yichen and Tegmark, Max and Soljacic, Marin and Bengio, Yoshua},

journal={Neural Computation},

volume={31},

number={4},

pages={765--783},

year={2019}

}