About

I am a PhD candidate in MIT's EECS department, working on machine learning with Professor Marin Soljačić. I obtained my BSc in math and physics from MIT. I am a student at IAIFI.

News

3/14/2022: I will be participating in MIT x Japan's Innovation Discovery Japan and will be a student leader for the visit of SONY & Sony Interactive Entertainment.

1/31/2022: Our SkyRadar solution won the best pitch at MEMSI by offering a new digital or "phygital" Airport City experience. Read more about our experience on MIT News. Let's chat about innovation!

Research Interests

- machine learning, artificial intelligence, natural language processing, computer vision

- self-supervised learning, representation learning

- AI for science, AI hardware/software co-design

Selected Publications

Learning with less labels

|

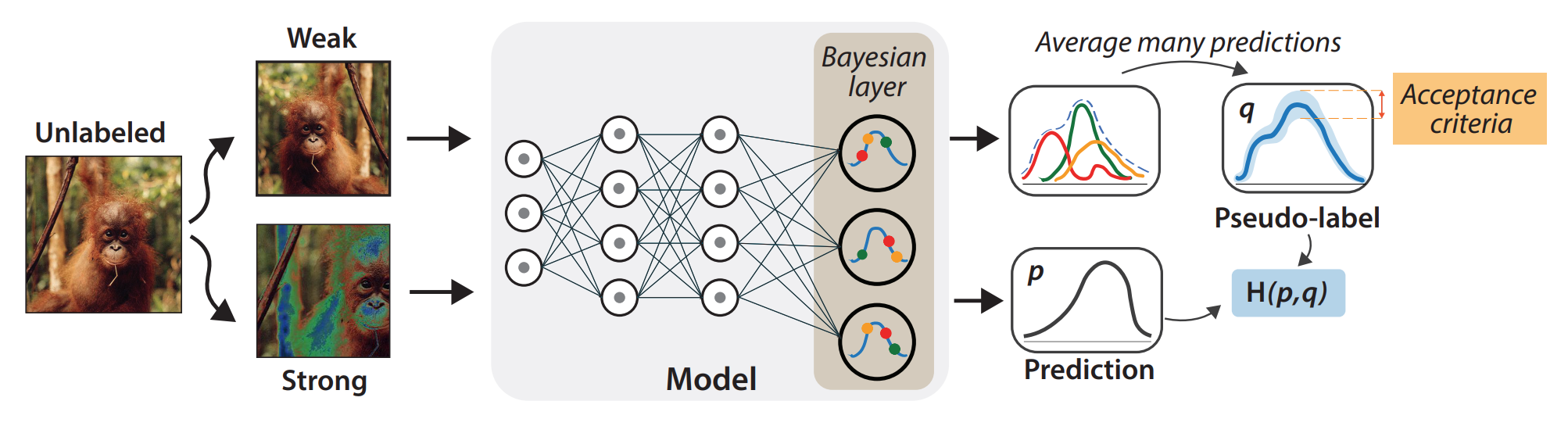

On the Importance of Calibration in Semi-supervised LearningCharlotte Loh, Rumen Dangovski, Shivchander Sudalairaj, Seungwook Han, Ligong Han, Leonid Karlinsky, Marin Soljacic, Akash SrivastavaPreprint. Under review., 2022paper / bibtex / Demonstating that calibration is important for semi-supervised learning, and a new method improving the state-of-the-art. |

|

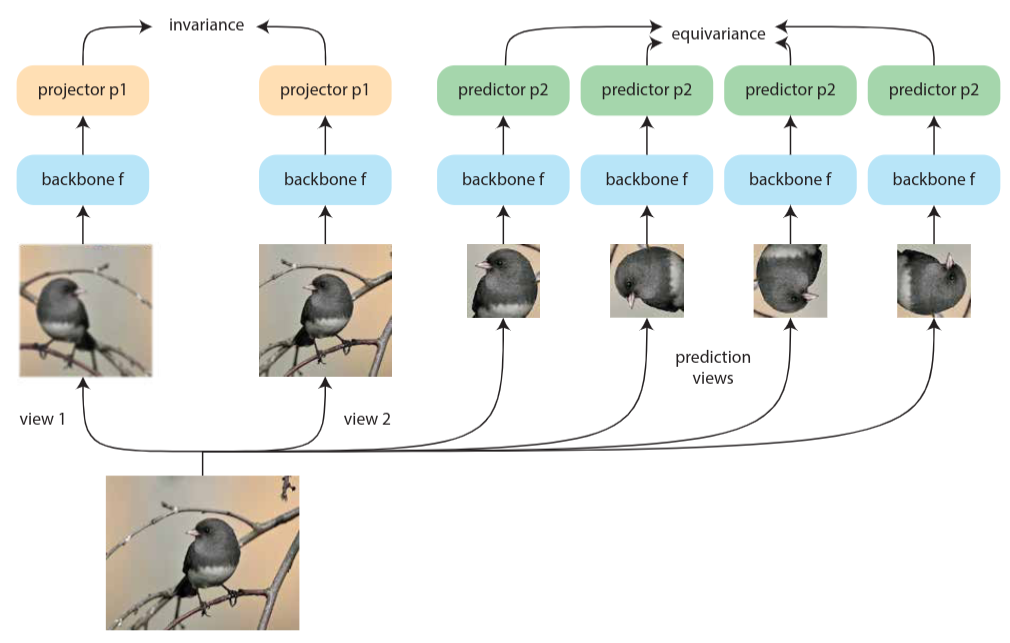

Equivariant Contrastive LearningRumen Dangovski, Li Jing, Charlotte Loh, Seungwook Han, Akash Srivastava, Brian Cheung, Pulkit Agrawal, Marin SoljacicICLR, 2022paper / bibtex / code / blog / talkMethod revealing the complementary nature of invariance and equivariance in contrastive learning. |

|

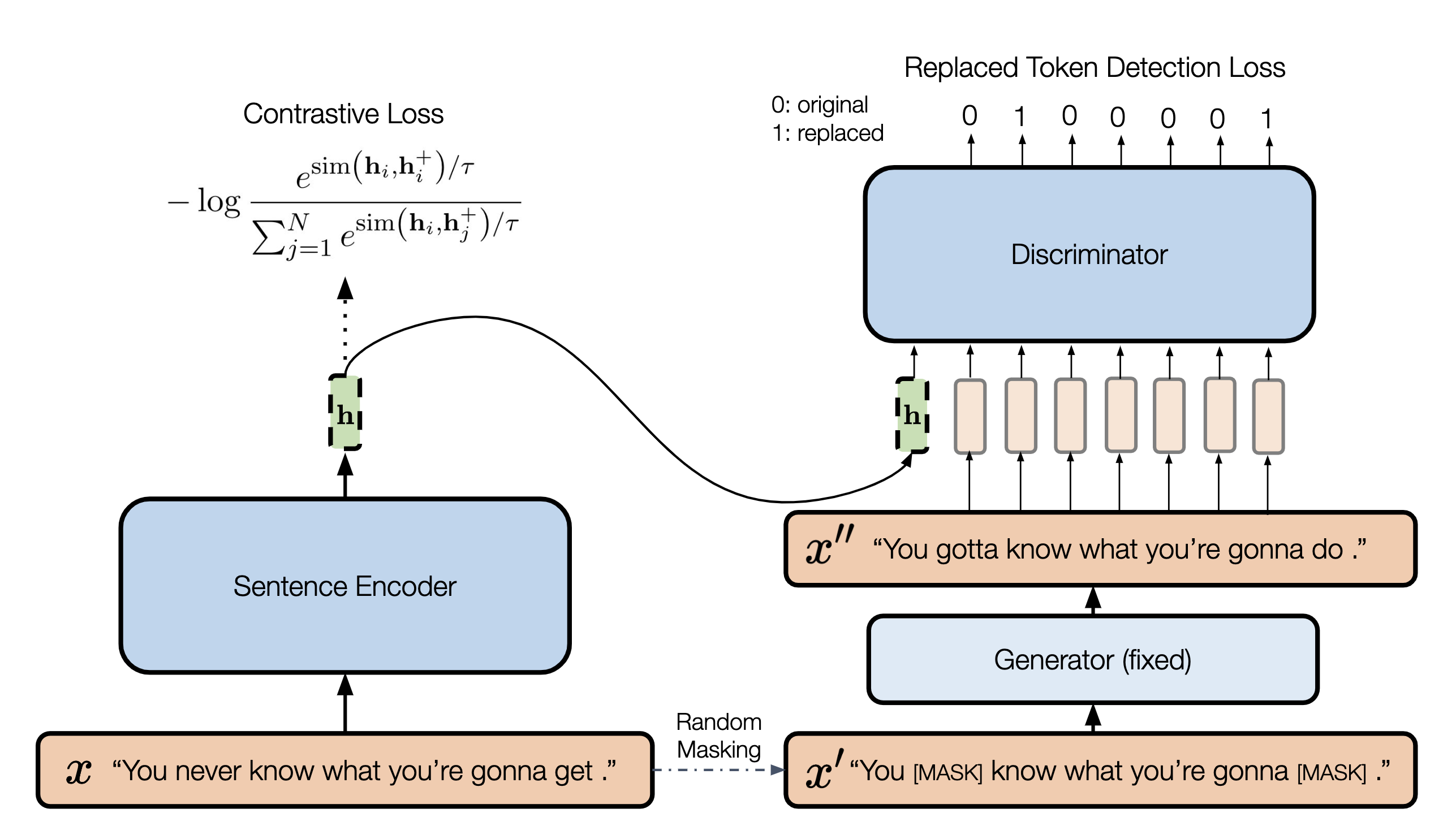

DiffCSE: Difference-based Contrastive Learning for Sentence EmbeddingsYung-Sung Chuang, Rumen Dangovski, Hongyin Luo, Yang Zhang, Shiyu Chang, Marin Soljacic, Shang-Wen Li, Wen-tau Yih, Yoon Kim, James GlassNAACL, 2022paper / bibtex / codeEquivariant Contrastive Learning contributes to state-of-the-art results among unsupervised sentence representation learning methods. |

|

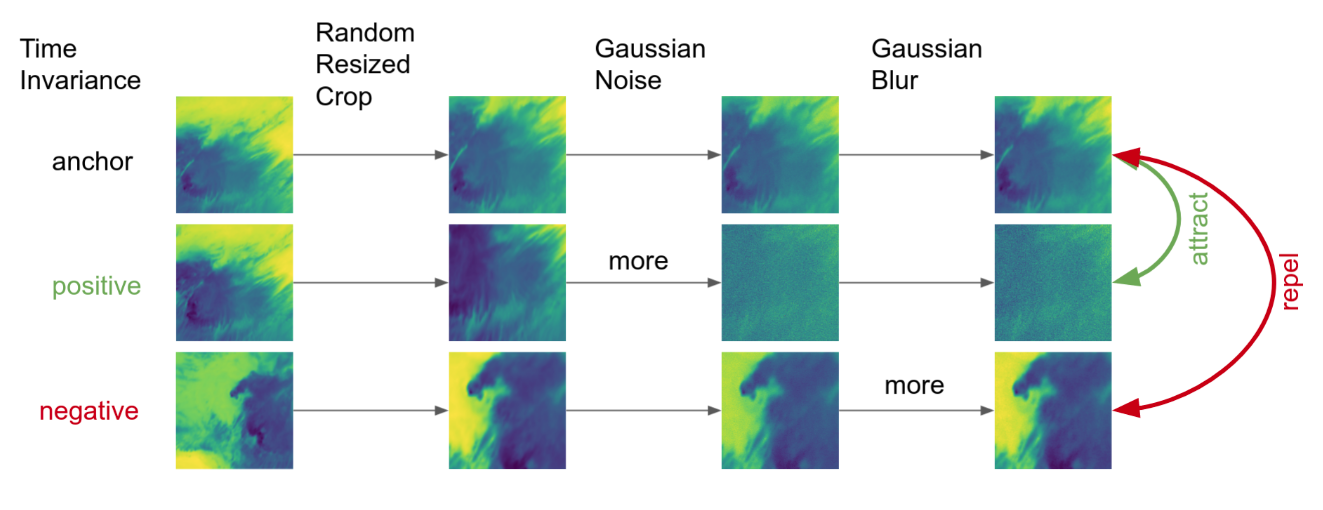

Meta-Learning and Self-Supervised Pretraining for Storm Event Imagery TranslationIleana Rugina*, Rumen Dangovski*, Mark Veillette, Pooya Khorrami, Brian Cheung, Olga Simek, Marin Soljacic,ICLR AI for Earth and Space Science Workshop, 2022paper / bibtex / codeNovel few-shot multi-task learning benchmark for image-to-image translation with meta-learning and self-supervsied learning baselines. |

|

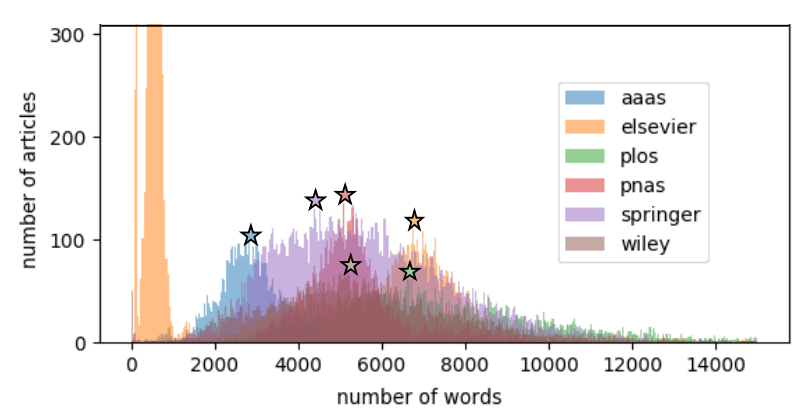

We Can Explain Your Research in Layman’s Terms: Towards Automating Science Journalism at ScaleRumen Dangovski, Michelle Shen, Dawson Byrd, Li Jing, Desislava Tsvetkova, Preslav Nakov, Marin SoljacicAAAI, 2021paper / bibtexApplication automating science journalism at scale as a neural abstractive summarization task. Application constrained by little labeled pairs (scientific paper, press release). |

|

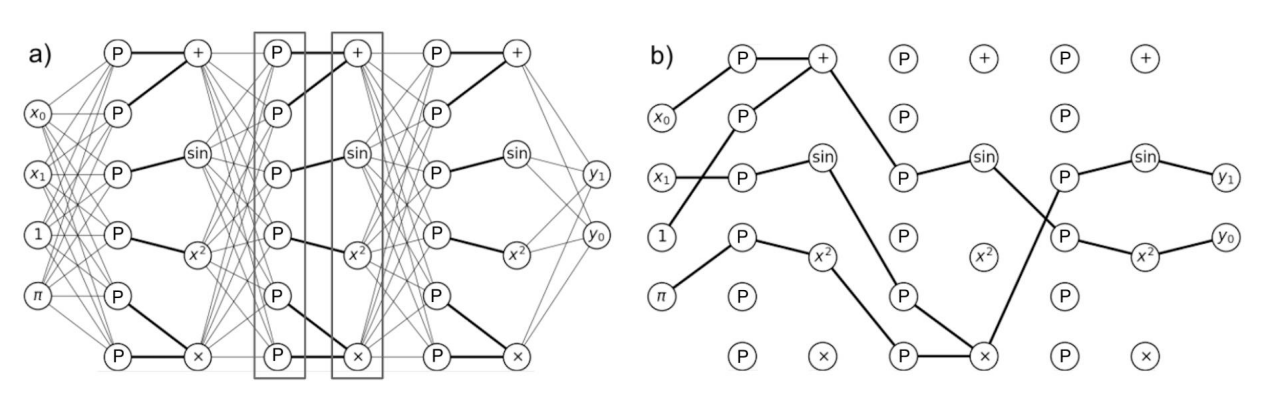

Fast Neural Models for Symbolic Regression at ScaleAllan Costa*, Rumen Dangovski*, Owen Dugan, Samuel Kim, Pawan Goyal, Joseph Jacobson, Marin Soljacic,Preprint. Under review., 2020paper / bibtex / codeNovel neuro-symbolic method for fast symbolic regression at scale. |

|

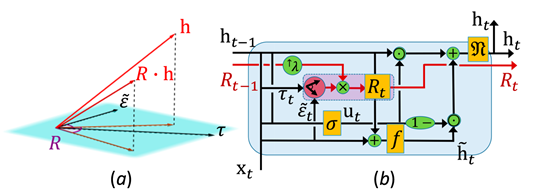

Rotational Unit of Memory: A Novel Representation Unit for RNNs with Scalable ApplicationsRumen Dangovski*, Li Jing*, Preslav Nakov, Mico Tatalovic, Marin SoljacicTACL (presented at NAACL), 2019paper / bibtex / code / blogNovel recurrent unit with improved long-term and associative memory. |

AI for Science

|

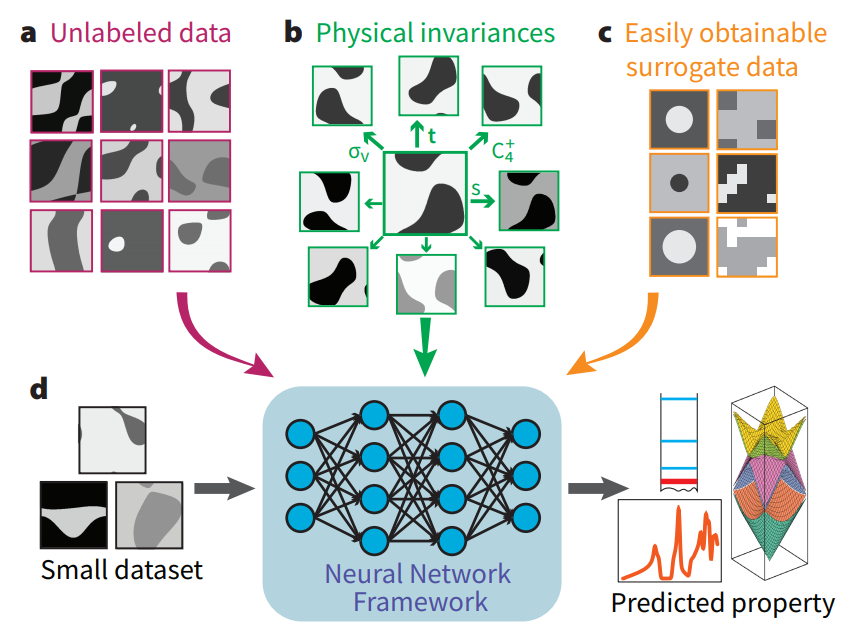

Surrogate-and invariance-boosted contrastive learning for data-scarce applications in scienceCharlotte Loh, Thomas Christensen, Rumen Dangovski, Samuel Kim, Marin SoljacicNature Communications, 2022paper / bibtex / codeMaking applications in science less "hungry" for data. |

|

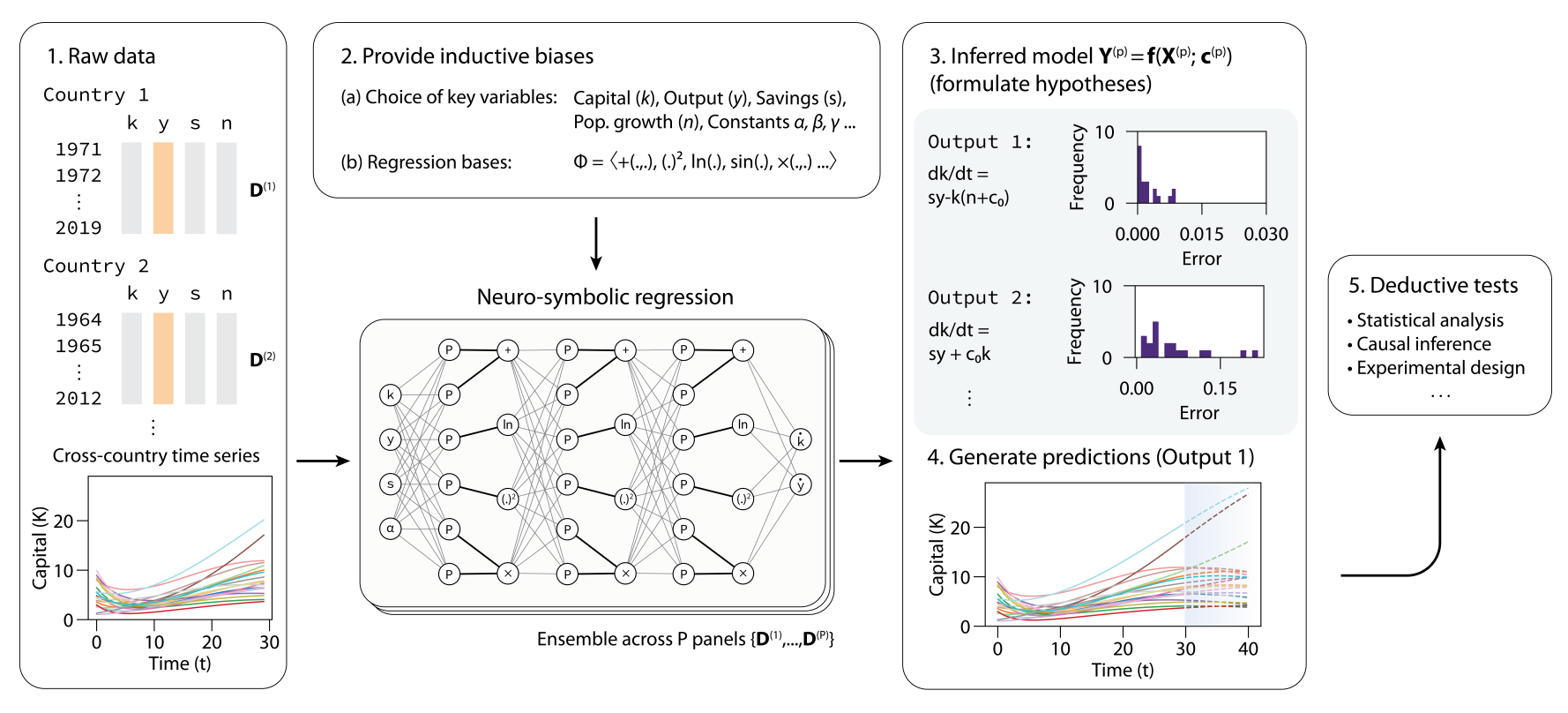

AI-Assisted Discovery of Quantitative and Formal Models in Social ScienceJulia Balla, Sihao Huang, Owen Dugan, Rumen Dangovski, Marin Soljacic,Preprint. Under review., 2022paper / bibtex / codeDiscovering laws in social science using OccamNet, our neuro-symbolic method. |

|

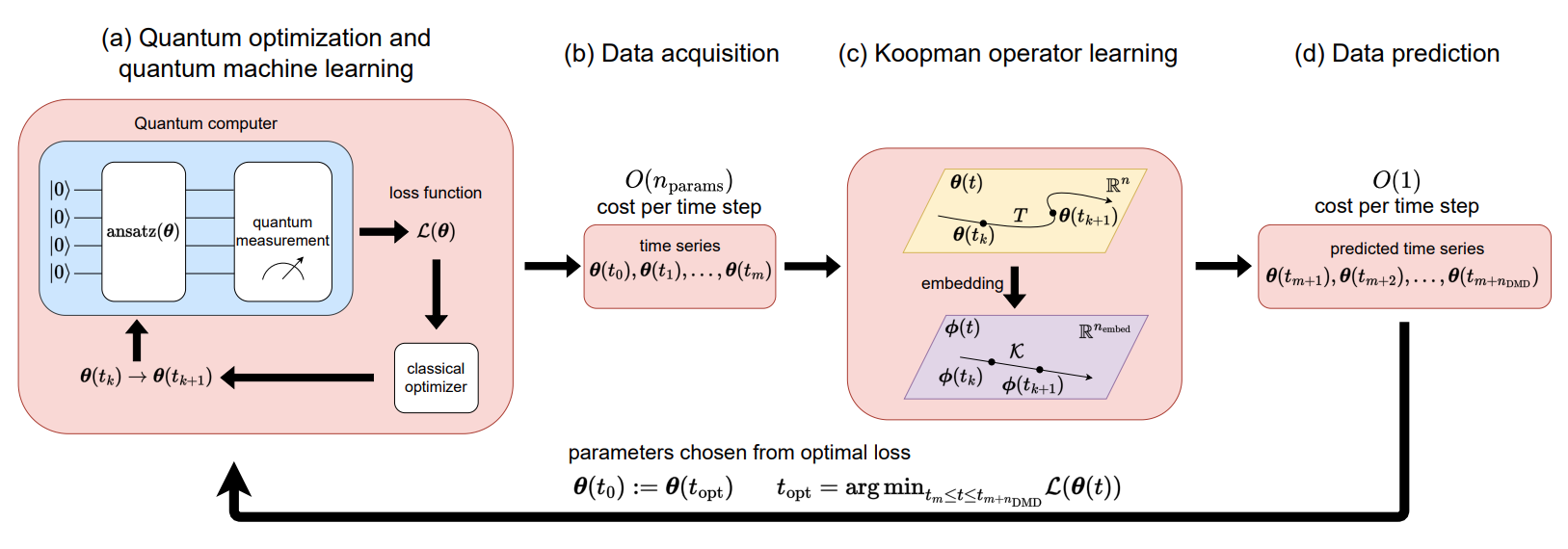

Koopman Operator learning for Accelerating Quantum Optimization and Machine LearningDi Luo, Jiayu Shen, Rumen Dangovski, Marin Soljacic,Preprint. Under review., 2022paper / bibtexAccelerating quantum optimization and quantum machine learning with Koopman operator learning. |

|

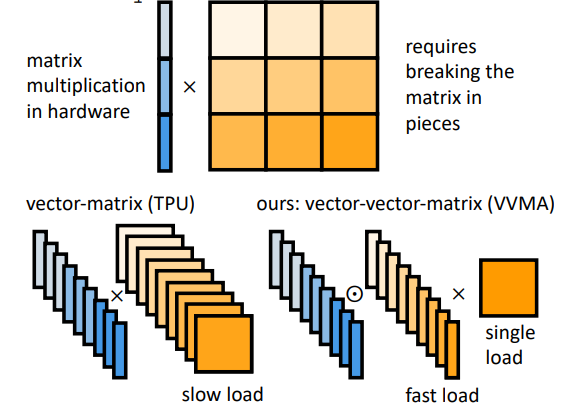

Vector-Vector-Matrix Architecture: A Novel Hardware-Aware Framework for Low-Latency Inference in NLP ApplicationsMatthew Khoury*, Rumen Dangovski*, Longwu Ou, Preslav Nakov, Yichen Shen, Li JingEMNLP, 2020paper / bibtexNovel architecture for vector-matrix multiplication. |

|

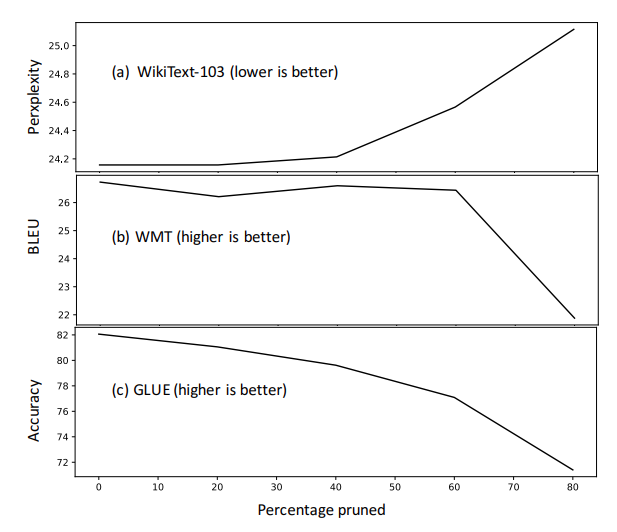

Data-Informed Global Sparseness in Attention Mechanisms for Deep Neural NetworksIleana Rugina*, Rumen Dangovski*, Li Jing, Preslav Nakov, Marin Soljacic,Preprint. Under review., 2020paper / bibtex / codeData-informed attention pruning. |