Abstract

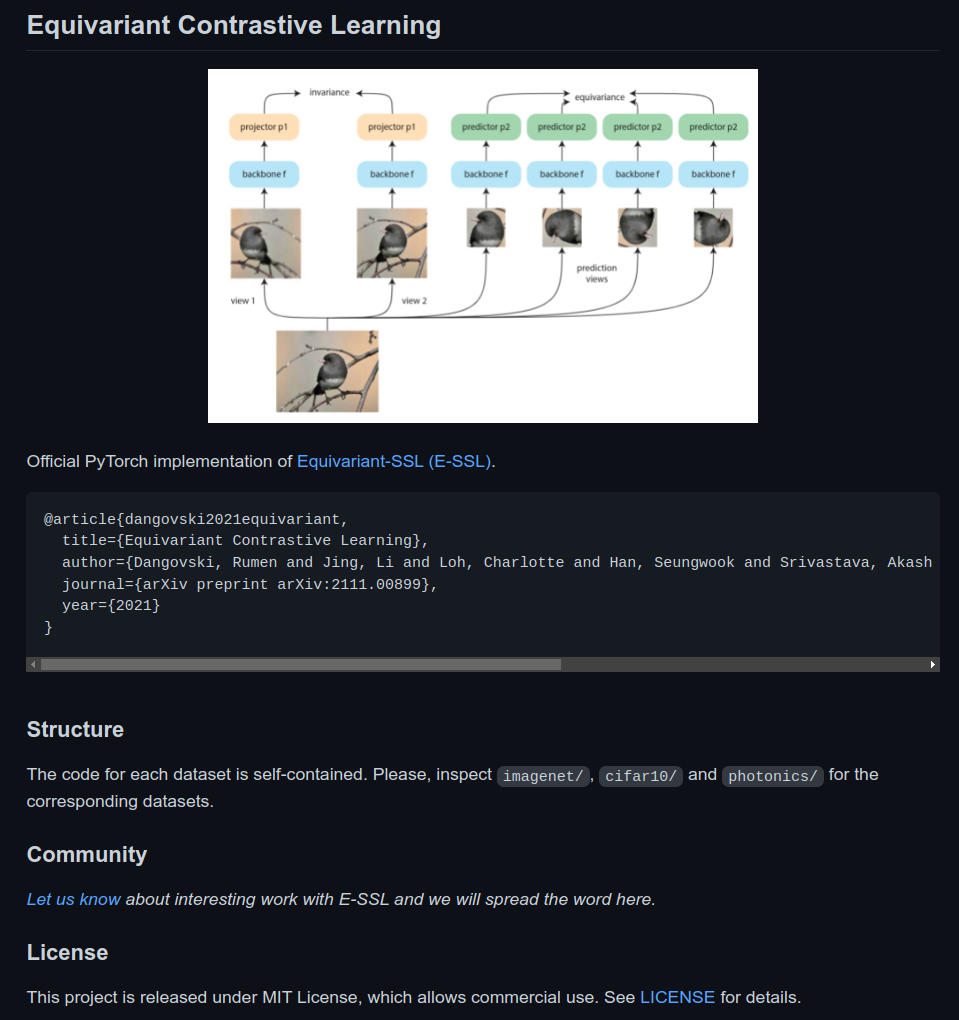

State-of-the-art self-supervised learning (SSL) pre-training produces semantically good representations by encouraging them to be invariant under meaningful transformations prescribed from human knowledge. In fact, invariance is a trivial instance of a broader class of properties called equivariance, which can be intuitively understood as the property that representations transform according to the way the inputs transform. Here, we show that rather than using only invariance, pre-training that encourages non-trivial equivariance to some transformations, while maintaining invariance to other transformations, can improve the semantic quality of representations. Specifically, we extend popular SSL methods to a more general framework, which we name Equivariant Self-Supervised Learning (E-SSL). In E-SSL, a simple additional pre-training objective encourages equivariance by predicting the transformations applied to the input. We demonstrate E-SSL's effectiveness empirically on several popular computer vision benchmarks, e.g. improving SimCLR to 72.5% linear probe accuracy on ImageNet. Furthermore, we demonstrate the usefulness of E-SSL for applications beyond computer vision; in particular, we show its utility on regression problems in photonics science. Our code, datasets and pre-trained models are available at this link to aid further research in E-SSL.
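As a rough illustration of the additional objective described above, the sketch below assumes the transformation to be predicted is a four-fold rotation (0, 90, 180, or 270 degrees). This choice is an assumption made for illustration, since the abstract does not name the transformation. All names here (`rot90`, `four_fold_rotations`, `equivariance_loss`, `essl_loss`, `predict_logits`, `lam`) are hypothetical and are not taken from the released code. The invariance term is treated as an opaque number that an existing SSL method such as SimCLR would supply.

```python
import math


def rot90(image):
    """Rotate a 2D grid (list of rows) by 90 degrees counter-clockwise."""
    return [list(row) for row in zip(*image)][::-1]


def four_fold_rotations(image):
    """Return (rotated_image, label) pairs for 0, 90, 180 and 270 degrees."""
    views = []
    current = [list(row) for row in image]  # copy so the input is not aliased
    for label in range(4):
        views.append((current, label))
        current = rot90(current)
    return views


def cross_entropy(logits, label):
    """Numerically stable softmax cross-entropy for a single example."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(v - m) for v in logits))
    return log_z - logits[label]


def equivariance_loss(image, predict_logits):
    """Mean cross-entropy for predicting which rotation was applied.

    `predict_logits` is a hypothetical callable standing in for
    encoder + prediction head; it maps a view to four logits.
    """
    views = four_fold_rotations(image)
    return sum(cross_entropy(predict_logits(v), y) for v, y in views) / len(views)


def essl_loss(invariance_loss, image, predict_logits, lam=1.0):
    """Invariance term plus a weighted transformation-prediction term.

    `lam` is an arbitrary illustrative weight, not a value from the paper.
    """
    return invariance_loss + lam * equivariance_loss(image, predict_logits)
```

A predictor that ignores its input and returns equal logits cannot tell the rotations apart, so its prediction loss equals `math.log(4)`. Lowering that term requires the representation to retain information about the applied rotation, which is the sense in which the objective encourages equivariance rather than invariance to that transformation.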

Citation

@article{dangovski2021equivariant,
  title={Equivariant Contrastive Learning},
  author={Dangovski, Rumen and Jing, Li and Loh, Charlotte and Han, Seungwook and Srivastava, Akash and Cheung, Brian and Agrawal, Pulkit and Solja{\v{c}}i{\'c}, Marin},
  journal={arXiv preprint arXiv:2111.00899},
  year={2021}
}